The L&D Leader's Guide to AI Adoption

Learn how successful organizations adopt AI with purpose, not hype. This practical guide covers the foundational steps for AI adoption on non-technical teams. Explore best practices for tool evaluation, workforce readiness, and change management to ensure long-term adoption success.

If you're a Learning & Development leader tasked with rolling out AI across your organization, you're probably feeling a mix of excitement and overwhelm. Your CEO wants "AI transformation." Half your team is already using ChatGPT in secret. The other half is convinced AI will either steal their jobs or produce garbage. And you? You're supposed to make sense of all this and create a coherent strategy.

Here's the good news: AI adoption for non-technical teams isn't about teaching people to code or build models. It's about helping knowledge workers use AI to improve their processes, boost productivity, and deliver better results. This guide will give you the structure you need to lead AI adoption with confidence.

Where do I even start with AI adoption?

The short answer: Start with assessment, not training.

Before you schedule a single workshop or buy a single AI tool license, you need to understand where your organization actually stands. The biggest mistake L&D leaders make is treating AI adoption like a traditional training rollout, where you can simply cascade content from the top down.

AI adoption is messier. Some people are already power users. Others haven't touched it. Some teams have found game-changing use cases. Others are still skeptical. You need a baseline.

Take an AI Adoption Assessment to benchmark your organization across five critical dimensions:

Manager AI literacy

Team adoption behaviors

Process integration

Output quality and business impact

Cultural readiness

Use our free AI Adoption Assessment Framework to evaluate where your teams stand and identify specific gaps before you invest in training.

How do I deal with the "secret AI users"?

Secret users are already using ChatGPT for everything. They've discovered Claude, Perplexity, and three other tools you've never heard of. They're convinced they're AI experts because they got a good response once. And they’re amazing to have on your team, because most of the time they love to talk about what they’re learning.

The temptation is to either ignore them (they're already trained, right?) or crack down on them (shadow IT concerns, data security, etc.). Neither approach works.

Instead, enlist them as champions. And give them structure.

Here's how:

1. Identify Your Power Users

Run a simple survey or hold listening sessions to find out who's already experimenting. Ask questions like:

What AI tools are you currently using?

What's the best result you've achieved with AI?

What's been your biggest frustration?

2. Give Them a Framework

Power users often have great instincts but lack systematic approaches. They might get lucky with prompts but can't replicate results consistently. Teach them:

Structured prompting techniques

How to evaluate AI outputs critically

When to use (and not use) AI

Ethical guardrails

3. Create a Champions Program

Turn your power users into peer educators. Have them:

Share use cases in team meetings

Document their workflows

Mentor skeptics one-on-one

Test new tools and provide feedback

Channel their enthusiasm into organizational learning rather than letting it remain fragmented individual experimentation.

How do we handle people who don’t want to use AI?

Handling resistance to AI requires conversation and honesty. Not everyone is excited about AI. Some people are genuinely worried about job security. Others have tried it and gotten mediocre results. Some have concerns about AI itself, from its environmental impact and effect on energy bills to ethical issues like scaling bias. Still others just don't see the point.

Resistance isn't a training problem. It’s often a change management problem. Through quality conversations you can find out why someone doesn’t want to use AI and find ways to work with them. A few examples:

"I tried it and it didn't work."

The real issue: They don't know how to prompt effectively or evaluate outputs

Your move: Focus on skill-building, not tool promotion. Show them the anatomy of a good prompt. Teach them how to iterate.

"It's going to replace my job."

The real issue: Fear and lack of clarity about the future

Your move: Be transparent about how AI fits into your org's strategy. Show examples of augmentation, not replacement. Emphasize that people who use AI well will be more valuable, not less.

"I don't trust it."

The real issue: Valid concerns about accuracy, bias, or ethical use

Your move: Teach critical evaluation skills. Show them how to fact-check, when to verify, and where AI fails. Create clear policies around appropriate use.

"I don't have time to learn another tool."

The real issue: They're overwhelmed and don't see immediate ROI

Your move: Start with their pain points. Find one repetitive task AI can eliminate. Get them a quick win. Build from there.

In the end, if someone doesn’t want to use AI, that’s OK. That’s their choice. Focus on those who do want to use it.

How do I choose which AI tools to standardize?

Don’t start with tools. You probably can't (and shouldn't) standardize on just one tool in the beginning. Different teams have different needs. Marketing might thrive with Jasper. Sales might love ChatGPT for email drafting. HR might need something specialized for job descriptions. Customer service might require a custom chatbot.

Instead of picking one tool, focus on building AI fluency that transfers across tools.

Teach principles, not platforms:

How to write effective prompts (these work across all LLMs)

How to break complex tasks into AI-friendly steps

How to evaluate and refine outputs

How to integrate AI into existing workflows

That said, you do need some guardrails. Work with IT and Legal to:

Define which tools are approved for which types of data

Create clear policies about confidential information

Establish guidelines for client-facing AI use

Set expectations for output verification

What should my AI fluency training actually cover?

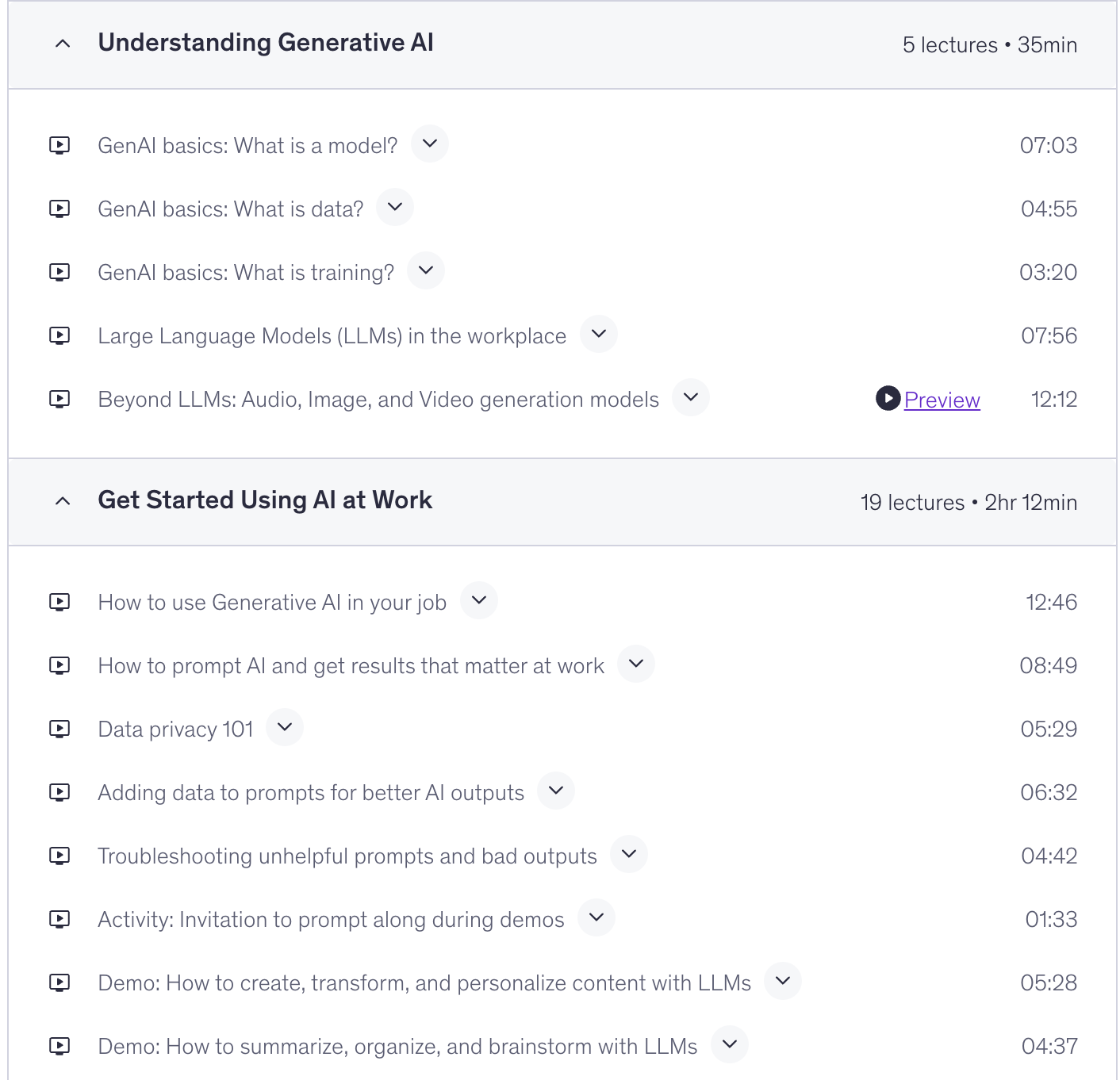

Let’s start with what not to do: Create a 2-hour presentation about "Introduction to AI" that covers the history of neural networks, the difference between machine learning and deep learning, and a demo of ChatGPT writing a poem. That will instantly make a non-technical audience tune out. (Boring AI was created specifically for this reason.)

Non-technical teams don't need to understand transformers. They need to know how to get their work done better.

Your training should be hands-on, relevant, and immediately applicable.

AI Fundamentals

Practical Prompting

Critical Evaluation

Workflow Integration

We follow this structure in our online AI literacy course for beginners, which more than 5,000 AI learners have completed (we’re fans of it!).

How do I measure success?

The two most common metrics are the number of people using the AI tool and the number of people completing the training. Both are wildly unhelpful when you’re trying to measure the impact of upskilling your employees for AI.

Congratulations, you trained 500 people! 428 people are using the tool! But how many of them are actually using AI in their daily work? How many processes were changed or improved with AI? How much time has been saved?

There are better metrics for AI adoption success out there. A few of them:

Adoption rate: What percentage of trained employees are using AI weekly?

Use case diversity: How many different applications have teams found?

Productivity gains: Can you measure time saved or output increased?

Quality improvements: Are the results better than before?

Cultural indicators: Are people talking about AI openly? Sharing tips? Asking for help?
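The first two metrics above are simple enough to compute from pulse survey answers. Here is a minimal sketch, assuming each survey response records whether the person was trained, whether they use AI weekly, and which use cases they reported. The `SurveyResponse` structure, field names, and sample data are all hypothetical, not part of any particular survey tool.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class SurveyResponse:
    """One employee's answers from a hypothetical pulse survey."""
    employee: str
    trained: bool
    uses_ai_weekly: bool
    use_cases: FrozenSet[str] = frozenset()


def adoption_rate(responses: Iterable[SurveyResponse]) -> float:
    """Percentage of trained employees who report using AI weekly."""
    trained = [r for r in responses if r.trained]
    if not trained:
        return 0.0  # avoid dividing by zero before anyone is trained
    weekly = sum(1 for r in trained if r.uses_ai_weekly)
    return 100.0 * weekly / len(trained)


def use_case_diversity(responses: Iterable[SurveyResponse]) -> int:
    """Number of distinct use cases reported across all responses."""
    distinct = set()
    for r in responses:
        distinct.update(r.use_cases)
    return len(distinct)


# Made-up sample data for illustration only.
responses = [
    SurveyResponse("A", True, True, frozenset({"email drafting", "meeting summaries"})),
    SurveyResponse("B", True, True, frozenset({"email drafting"})),
    SurveyResponse("C", True, False),
    SurveyResponse("D", True, True, frozenset({"job descriptions"})),
    SurveyResponse("E", False, True, frozenset({"research"})),
]

print(f"Adoption rate: {adoption_rate(responses):.1f}%")      # Adoption rate: 75.0%
print(f"Distinct use cases: {use_case_diversity(responses)}")  # Distinct use cases: 4
```

Note that the adoption rate counts only trained employees in the denominator, which matches the question "What percentage of trained employees are using AI weekly?" Untrained users (like employee E here) still count toward use case diversity.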

Below are some ways to track those tricky AI metrics. Use these questions to shape discussions in your next stakeholder meeting:

Monthly pulse surveys:

How often are you using AI tools?

What's working well?

What roadblocks are you hitting?

What support do you need?

Use case documentation:

Create a shared space where people document their AI workflows

Celebrate wins publicly

Analyze patterns to identify what's working

Manager check-ins:

Managers should be asking about AI use in 1-on-1s

Not as surveillance, but as support

"What have you tried with AI this week?"

"Where are you stuck?"

Business impact tracking:

Partner with team leads to identify measurable outcomes

Before/after time studies on key tasks

Quality assessments on AI-assisted work

Customer satisfaction for AI-enhanced processes

What’s the role of managers in AI adoption efforts?

Your managers are critical to AI adoption (which is why we’re so focused on them). If managers aren't using AI themselves, your adoption efforts will fail. If they’re not actively talking with their teams about how they’re using AI, what’s working, and what’s not, AI adoption will fail. Point blank.

Managers set the tone. If they view AI training as "something for the team" but don't engage themselves, everyone will treat it as optional. If they model AI use, celebrate wins, and coach their teams through challenges, adoption accelerates.

Managers should complete the same core training as their teams, PLUS management-specific AI training. They should be your most fluent AI users, not just cheerleaders.

How do I handle data security and ethical concerns with AI?

Don't wait until something goes wrong to address these.

From day one, your training should include:

Clear responsible AI use policies:

What information can and cannot be entered into AI tools

How to anonymize data when using AI

Which tools are approved for which purposes

What to do if someone accidentally shares sensitive information
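To make "anonymize data" concrete in a workshop, it can help to show what a simple redaction step looks like before text is pasted into an AI tool. The sketch below is only an illustration: the `redact` function and its two patterns are assumptions made for this example, and they catch only obvious email addresses and phone numbers. Names, account details, and context clues will slip through, so this is not a substitute for your approved tools and policies.

```python
import re

# Simple illustrative patterns; real data will contain formats these miss.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace obvious email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


note = "Contact jane.doe@example.com or 555-123-4567 about the renewal."
print(redact(note))
# Contact [EMAIL] or [PHONE] about the renewal.
```

A useful discussion point for training: the redacted sentence still says a renewal is underway, which may itself be confidential. Pattern matching handles the easy cases; human judgment handles the rest.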

Ethical frameworks:

How to recognize and mitigate bias in AI outputs

When AI use might be inappropriate or unfair

How to maintain transparency with clients and stakeholders

What to do when AI produces problematic content

Verification standards:

All AI outputs must be reviewed by a human

Fact-checking requirements for different types of content

Quality control processes for client-facing work

Documentation of AI use where relevant

Make these topics ongoing conversations, not one-time trainings. Include ethics scenarios in every workshop. Discuss real situations your teams have encountered. Create psychological safety for people to ask questions and raise concerns.

How to implement a realistic AI adoption timeline

AI adoption is a marathon, not a sprint.

Here's a rough timeline for most organizations:

Months 1-2: Assessment and Planning

Conduct AI adoption assessment

Identify power users and champions

Survey team needs and concerns

Develop training curriculum

Establish policies and guardrails

Months 3-4: Pilot Training

Train managers first

Launch champions program

Run pilot workshops with volunteer teams

Gather feedback and iterate

Months 5-6: Scaled Rollout

Roll out training organization-wide

Launch use case documentation system

Establish regular office hours or support channels

Begin tracking adoption metrics

Months 7-9: Integration and Optimization

Refine workflows based on what's working

Address adoption gaps in specific teams

Advanced training for power users

Measure and communicate business impact

Months 10-12: Sustainability

Make AI fluency part of onboarding

Regular refresher sessions

Continuous improvement of materials

Strategic planning for next-level adoption

Year 2 and Beyond: Maturity

AI fluency becomes embedded in organizational culture

Teams drive their own experimentation

L&D shifts from teaching basics to enabling advanced use cases

Continuous innovation and adaptation as AI tools evolve

How do we keep up with how fast AI is changing?

It’s impossible to keep up. So don’t. Your job isn’t to learn everything. But you can build a learning system.

AI tools are evolving rapidly. New capabilities drop weekly. What worked last month might be outdated today. You cannot possibly keep pace with every development.

Instead of trying to be the expert on every tool, become the expert on building AI fluency.

Create a Learning Ecosystem

1. Establish a community of practice

Regular (monthly) sharing sessions where teams present use cases

A Slack channel or Teams space for questions and tips

Peer-to-peer learning opportunities

Recognition for innovation and experimentation

2. Curate, don't create everything

Follow key AI educators and practitioners

Share relevant updates and resources

Filter what's actually useful for your teams

Focus on transferable skills, not tool-specific tutorials

3. Build feedback loops

Regular pulse checks on what's working

Quick surveys after training sessions

Office hours where people can bring real problems

Continuous iteration of your materials

4. Partner with power users

They're often ahead of you on tools—that's okay!

Tap them to test new capabilities

Have them share discoveries with the team

Collaborate on updating training materials

What if leadership doesn't support this?

This is the toughest situation to be in. You're excited about AI adoption, but your leadership is lukewarm or skeptical. So start by building the business case for AI adoption and training.

Don't lead with "AI is the future." Lead with business impact.

Gather evidence:

Efficiency gains: If Marketing can draft social posts 3x faster with AI, calculate the time savings

Quality improvements: If Sales emails get better response rates when AI-assisted, measure it

Cost savings: If Customer Service can handle more inquiries with AI support, quantify it

Competitive risk: If competitors are leveraging AI and you're not, what's the cost of falling behind?
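The efficiency calculation is simple arithmetic, and working through it once makes the number easier to defend in front of leadership. Here is a rough sketch, assuming you know how long a task takes today, how much faster it gets with AI, and how often the team does it. Every figure below (30 minutes per post, 60 posts a month, $50 per hour) is a made-up placeholder; swap in your own measurements.

```python
def hours_saved_per_month(baseline_minutes: float, speedup: float,
                          tasks_per_month: int) -> float:
    """Hours saved per month when a task becomes `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    minutes_with_ai = baseline_minutes / speedup
    minutes_saved = (baseline_minutes - minutes_with_ai) * tasks_per_month
    return minutes_saved / 60


# Hypothetical inputs: 30 minutes per social post, 3x faster with AI,
# 60 posts per month, and a loaded labor cost of $50 per hour.
hours = hours_saved_per_month(baseline_minutes=30, speedup=3, tasks_per_month=60)
hourly_cost = 50

print(f"Hours saved per month: {hours:.1f}")                    # Hours saved per month: 20.0
print(f"Estimated monthly value: ${hours * hourly_cost:,.0f}")  # Estimated monthly value: $1,000
```

Notice that "3x faster" means each task takes one third of the time, so the saving is two thirds of the baseline, not three times it. Getting that detail right keeps your estimate credible.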

Start Small and Prove Value

If you can't get budget for a full program:

Run a pilot with one team

Use free tools to minimize costs

Document everything

Measure outcomes rigorously

Present results to leadership

Often, a small success story is more convincing than any presentation about AI's potential.

Frame It as Risk Management

AI adoption isn't optional anymore. Your employees are already using it—probably without guidance, policies, or oversight. The question isn't "Should we embrace AI?" It's "Should we guide our people in using it responsibly, or leave them to figure it out alone?"

Shadow AI use is real and risky. Training and policies reduce that risk.

AI can also help you build that case. Here’s a helpful little prompt:

My leadership isn’t supportive of AI training yet. I need to make a clear, evidence-based business case. Help me:

Pick one or two small use cases where AI could make an impact in my company.

Show potential business results such as:

Time or cost savings

Quality improvements

Risk of falling behind competitors

Create a short pilot plan using free or low-cost tools.

Frame AI training as risk management — employees are already using AI, so training helps guide them safely and effectively.

Write a short, persuasive summary I can share with leadership that focuses on business impact, not hype.

The most common mistakes L&D makes with AI adoption

We know it’s hard out there. Most L&D teams are doing a lot with a little, and they’re still trying to figure out how to use AI in their own org. So let’s look at where the challenges are and how to overcome them:

1. Treating AI adoption like traditional training

AI fluency requires practice and iteration, not just content consumption. Hands-on workshops beat webinars every time.

2. Focusing on tools instead of skills

Tools change. Skills transfer. Teach prompting principles, not just "how to use ChatGPT."

3. Ignoring the skeptics

Resistance is data. Listen to concerns, address them directly, and meet people where they are.

4. One-and-done training

AI fluency isn't a checkbox. It requires ongoing support, community, and iteration.

5. Underestimating change management

This isn't just about learning a new tool. It's about changing how people work. Treat it like the organizational transformation it is.

6. Not measuring what matters

"Training completion rate" is vanity. Adoption, application, and impact are what count.

7. Trying to do it all yourself

Build a community. Leverage champions. Partner with managers. You can't scale AI adoption alone.

Final Thoughts: Your Role as an L&D Leader

AI adoption is one of the most significant changes you'll lead in your career. It's messy, fast-moving, and sometimes overwhelming. But it's also an incredible opportunity to transform how your organization works.

Your job isn't to be the AI expert. Your job is to be the learning architect.

You're building the systems, structures, and culture that enable your people to learn, experiment, and improve. You're creating psychological safety for people to try new things and fail forward. You're turning pockets of excellence into organizational capability.

This is hard work. But it's also some of the most interesting, wild work you can do as an L&D leader.